Author: L.S.Lowe. File: raidperf20. Original version, tests performed: 20101018. This minor update: 20120426. Part of Guide to the Local System.

The RAID for this series of tests is an Infortrend A24S-G2130, equipped with 24 Hitachi A7K2000 disks (2TB, SATA, 7.2k, HUA722020ALA330) and 1 GB of RAM buffer memory. It was set up as 2 raidsets of 12 disks each, configured as RAID6, so each raidset has the data equivalent of 10 disks. The RAID stripe size was kept at the factory default of 128 kB. The A24S-G2130 RAID controller comes with 4 multi-lane 12Gbps host ports (quoting the Infortrend brochure). The RAID was attached to a Dell R410 server via an LSI SAS3801E host bus adapter card. The server was equipped with two Intel E5520 quad-core processors and 12GB of RAM. A summary graph of the bonnie++ results is shown below.

The ext4 file-system was formatted as follows:

# time mkfs -t ext4 -E stride=32,stripe-width=320 -i 65536 /dev/sd$dv

....

real 3m4.054s

user 0m2.823s

sys 0m32.838s

# tune2fs -c 0 -i 0 -r 1024000 -L $fs /dev/sd$dv
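The stride and stripe-width values follow from the RAID geometry described earlier. The sketch below shows the arithmetic only; the helper names are hypothetical, and the 4 KiB file-system block size is an assumption (it is what stride=32 implies for a 128 kB stripe size, but the mkfs output is not shown above).

```shell
#!/bin/sh
# Geometry quoted in the text; block size is an assumption (see lead-in).
chunk_kib=128     # RAID stripe (chunk) size per disk, in KiB
block_kib=4       # assumed ext4 block size, in KiB
data_disks=10     # 12-disk RAID6 raidset = 10 data disks

# Hypothetical helpers: file-system blocks per chunk, and per full data stripe.
ext4_stride()       { echo $(( $1 / $2 )); }
ext4_stripe_width() { echo $(( $1 * $2 )); }

stride=$(ext4_stride "$chunk_kib" "$block_kib")
width=$(ext4_stripe_width "$stride" "$data_disks")

# Only prints the option string; it does not run mkfs.
echo "-E stride=$stride,stripe-width=$width"
```

With the values above this prints the same option string as used in the mkfs command.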

The xfs file-system was formatted on a full 20TB raidset as follows:

# time mkfs.xfs -d su=128k,sw=10 -L $fs /dev/sd$dv

meta-data=/dev/sdb isize=256 agcount=19, agsize=268435424 blks

= sectsz=512 attr=2

data = bsize=4096 blocks=4883118080, imaxpct=5

= sunit=32 swidth=320 blks

naming =version 2 bsize=4096 ascii-ci=0

log =internal log bsize=4096 blocks=521728, version=2

= sectsz=512 sunit=32 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

real 0m14.011s

user 0m0.000s

sys 0m0.285s
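As a cross-check on the mkfs.xfs output above, the reported figures can be converted back into bytes and disks. This is a minimal sketch using only numbers copied from that output; the variable names are illustrative.

```shell
#!/bin/sh
# Values copied from the mkfs.xfs output above.
bsize=4096            # data block size in bytes
blocks=4883118080     # number of data blocks
sunit_blks=32         # stripe unit, in blocks
swidth_blks=320       # stripe width, in blocks

fs_bytes=$(( blocks * bsize ))               # total data size in bytes
fs_tb=$(( fs_bytes / 1000000000000 ))        # whole decimal terabytes
sunit_kib=$(( sunit_blks * bsize / 1024 ))   # stripe unit in KiB
data_disks=$(( swidth_blks / sunit_blks ))   # stripe units per full stripe

echo "size: $fs_bytes bytes (about $fs_tb TB)"
echo "stripe unit: $sunit_kib KiB, data disks per stripe: $data_disks"
```

The stripe unit comes out at 128 KiB and the stripe spans 10 units, matching the su=128k,sw=10 options given to mkfs.xfs.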

I didn't want to disturb the logical drive RAIDsets of the RAID, which remained at 20TB each. But mkfs.ext4 didn't make it easy for me, even when I specified an extra parameter of block-count to keep the size within 16TiB, because it checked and complained about the size of the whole device first!

I then thought to make a GPT partition table and partition using

parted devicefile mklabel gpt

parted devicefile mkpart 2560s 16TiB

Note the use of 2560 sectors as the start offset of the partition, which is intended to cause data stripes written to remain optimally aligned with the RAID, otherwise performance can suffer. (This is irrespective of filesystem type: for example see this discussion of stripe/partition alignment when using the XFS filesystem). The use of 16TiB as the end offset ensured that the size was just less than 16TiB.
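The choice of 2560 sectors can be checked with a little arithmetic: a full data stripe is 10 chunks of 128 KiB, and 2560 sectors of 512 bytes is exactly one such stripe. The sketch below assumes 512-byte sectors (consistent with sectsz=512 in the mkfs.xfs output); check_start is a hypothetical helper, and sector 63 is included only as a contrasting example of the traditional DOS-style start offset.

```shell
#!/bin/sh
sector_bytes=512                           # assumed logical sector size
full_stripe_bytes=$(( 128 * 1024 * 10 ))   # 128 KiB chunk x 10 data disks

# Hypothetical helper: reports whether a start offset (in sectors)
# falls on a full-stripe boundary.
check_start() {
    if [ $(( $1 * sector_bytes % full_stripe_bytes )) -eq 0 ]; then
        echo aligned
    else
        echo misaligned
    fi
}

check_start 2560   # the offset used above
check_start 63     # traditional DOS-style start sector, for contrast
```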

Later that day I decided not to use a GPT partition, but instead to firmware-partition the RAID device into a 17.6 TB lun (16777000 MiB, just under 16 TiB), with the remaining 2.4 TB in another lun. This will make expansion easier once e2fsprogs is finally enhanced.
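The LUN size quoted above can be checked against the 16 TiB limit as follows. This is only a worked calculation with illustrative variable names.

```shell
#!/bin/sh
lun_mib=16777000                          # LUN size chosen above, in MiB
limit_mib=$(( 16 * 1024 * 1024 ))         # 16 TiB expressed in MiB

lun_bytes=$(( lun_mib * 1024 * 1024 ))    # LUN size in bytes
headroom_mib=$(( limit_mib - lun_mib ))   # margin below 16 TiB

echo "LUN: $lun_bytes bytes, $headroom_mib MiB below 16 TiB"
```

The result is about 17.6 TB in decimal units, with 216 MiB of margin below 16 TiB.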

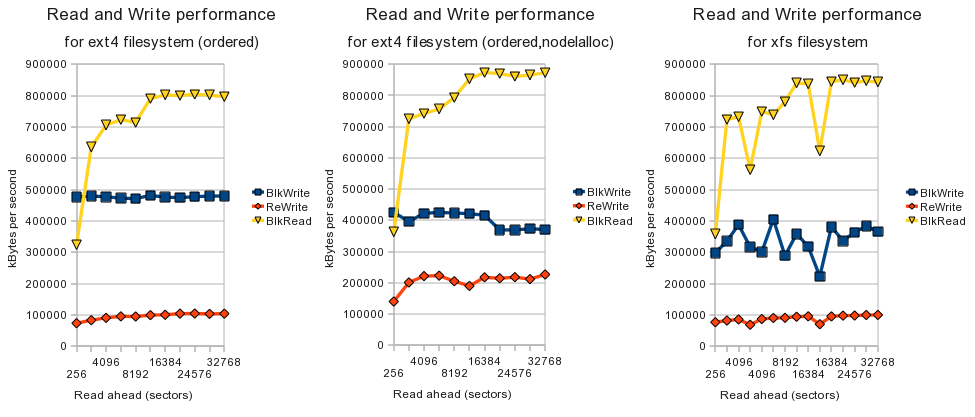

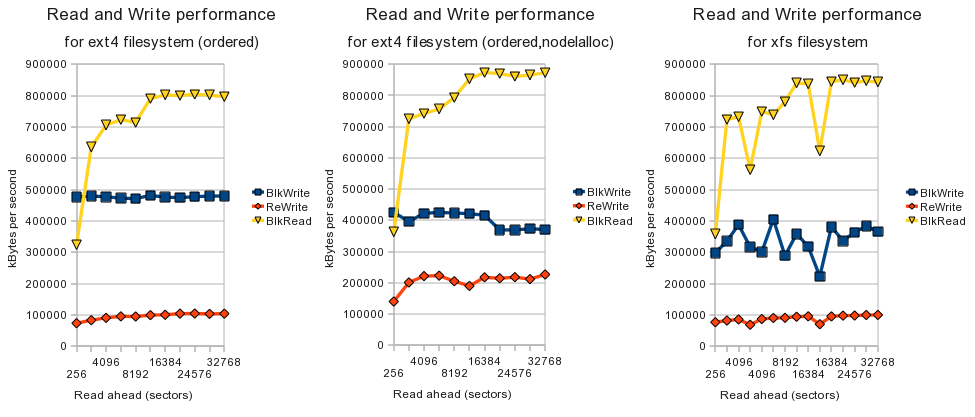

The read-ahead was set to various values using the blockdev command (ra=4096 means a read-ahead of 4096 sectors). The ext4 file-system was tested when mounted with default options and again with -o nodelalloc, and the xfs file-system was tested as well. Some tests for the same setup were repeated to get an idea of consistency. The kernel in use was 2.6.32.21-168.fc12.x86_64 in Fedora 12. Bonnie speed results are in KiB/second for I/O rates, and number per second for file creation and deletion rates; each %c column gives the CPU usage percentage for the measurement to its left.
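For orientation, the sketch below converts a few of the read-ahead settings from 512-byte sectors into KiB and prints the corresponding commands without running them. The device name /dev/sdX and mount point /mnt/test are placeholders, and ra_kib is a hypothetical helper; blockdev --setra and --getra are the standard options for setting and reading the read-ahead.

```shell
#!/bin/sh
dev=/dev/sdX    # placeholder device name

# Hypothetical helper: read-ahead in 512-byte sectors -> KiB.
ra_kib() { echo $(( $1 * 512 / 1024 )); }

for ra in 256 4096 16384 32768; do
    echo "ra=$ra is $(ra_kib "$ra") KiB: blockdev --setra $ra $dev"
done

# Commands are only printed here, not executed.
echo "check with: blockdev --getra $dev"
echo "ext4 without delayed allocation: mount -o nodelalloc $dev /mnt/test"
```

On these figures ra=256 corresponds to a single 128 KiB chunk, while ra=4096 is 2 MiB, somewhat more than one full 1280 KiB stripe.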

| Setup | Size:chk | chrW | %c | BlkW | %c | ReW | %c | chrR | %c | blkR | %c | Seek | %c | Create/Erase | SeqCre | %c | SeqRead | %c | SeqDel | %c | RanCre | %c | RanRd | %c | RanDel | %c |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ext4 default | ||||||||||||||||||||||||||

| ra=256,20b-ext4o | 24G:128k | 81175 | 99 | 476490 | 60 | 73566 | 8 | 71794 | 96 | 323996 | 16 | 223.1 | 1 | 256:1000:1000/256 | 43651 | 97 | 108795 | 86 | 71483 | 93 | 42360 | 97 | 27168 | 27 | 15992 | 36 |

| ra=2048,20b-ext4o | 24G:128k | 88196 | 99 | 479504 | 61 | 83210 | 9 | 76858 | 98 | 635524 | 29 | 228.8 | 1 | 256:1000:1000/256 | 31881 | 90 | 114230 | 85 | 68843 | 93 | 41144 | 97 | 27751 | 28 | 15649 | 36 |

| ra=4096,20b-ext4o | 24G:128k | 88279 | 99 | 476937 | 59 | 90695 | 9 | 78915 | 99 | 705441 | 31 | 222.0 | 1 | 256:1000:1000/256 | 32127 | 89 | 104989 | 86 | 68991 | 93 | 41420 | 97 | 27139 | 27 | 16087 | 36 |

| ra=4096,20b-ext4o | 24G:128k | 85165 | 99 | 472066 | 57 | 91014 | 9 | 78508 | 99 | 693976 | 33 | 224.4 | 1 | 256:1000:1000/256 | 43975 | 98 | 111441 | 85 | 65327 | 94 | 40149 | 97 | 27186 | 27 | 16104 | 36 |

| ra=6144,20b-ext4o | 24G:128k | 88339 | 99 | 472704 | 60 | 95358 | 10 | 79799 | 99 | 723186 | 34 | 222.7 | 1 | 256:1000:1000/256 | 30982 | 90 | 118095 | 90 | 61571 | 93 | 41778 | 97 | 27127 | 29 | 15873 | 38 |

| ra=8192,20b-ext4o | 24G:128k | 82929 | 99 | 471103 | 59 | 94954 | 10 | 79310 | 99 | 713955 | 33 | 222.2 | 1 | 256:1000:1000/256 | 32367 | 88 | 123535 | 89 | 72418 | 93 | 43455 | 97 | 27503 | 27 | 16304 | 36 |

| ra=12288,20b-ext4o | 24G:128k | 87872 | 99 | 481416 | 59 | 99325 | 11 | 79504 | 99 | 790598 | 37 | 232.4 | 1 | 256:1000:1000/256 | 31389 | 91 | 114661 | 86 | 69301 | 93 | 41207 | 96 | 29078 | 29 | 15699 | 36 |

| ra=16384,20b-ext4o | 24G:128k | 82989 | 99 | 478670 | 60 | 103795 | 11 | 82446 | 99 | 807280 | 35 | 222.2 | 2 | 256:1000:1000/256 | 32922 | 89 | 112688 | 90 | 70256 | 93 | 38772 | 95 | 27280 | 28 | 15875 | 38 |

| ra=16384,20b-ext4o | 24G:128k | 85754 | 99 | 475715 | 60 | 100568 | 11 | 82046 | 99 | 801717 | 35 | 223.3 | 2 | 256:1000:1000/256 | 30922 | 89 | 117835 | 90 | 65671 | 93 | 41589 | 97 | 27223 | 27 | 16012 | 36 |

| ra=20480,20b-ext4o | 24G:128k | 84357 | 99 | 474338 | 59 | 104198 | 11 | 77405 | 99 | 800019 | 38 | 222.0 | 2 | 256:1000:1000/256 | 31826 | 90 | 117924 | 90 | 61777 | 94 | 42370 | 97 | 26242 | 27 | 16440 | 38 |

| ra=24576,20b-ext4o | 24G:128k | 88202 | 99 | 477174 | 58 | 104481 | 11 | 80276 | 99 | 804058 | 36 | 222.3 | 1 | 256:1000:1000/256 | 31665 | 89 | 120075 | 89 | 62905 | 94 | 41659 | 97 | 27206 | 29 | 15624 | 37 |

| ra=28672,20b-ext4o | 24G:128k | 79100 | 99 | 479355 | 58 | 103440 | 11 | 82380 | 99 | 801705 | 35 | 227.3 | 2 | 256:1000:1000/256 | 32376 | 91 | 113255 | 83 | 71579 | 93 | 41143 | 97 | 28204 | 33 | 15487 | 39 |

| ra=32768,20b-ext4o | 24G:128k | 87624 | 99 | 479255 | 59 | 104190 | 11 | 80927 | 99 | 796217 | 34 | 223.2 | 2 | 256:1000:1000/256 | 30100 | 88 | 128455 | 95 | 68130 | 94 | 42747 | 97 | 27286 | 27 | 16037 | 37 |

| ext4 with nodelalloc option | ||||||||||||||||||||||||||

| ra=256,20b-ext4o-n | 24G:128k | 79649 | 99 | 425484 | 85 | 140274 | 17 | 80440 | 98 | 363111 | 18 | 213.9 | 1 | 256:1000:1000/256 | 35441 | 96 | 90431 | 73 | 48538 | 95 | 29750 | 82 | 24998 | 27 | 14899 | 48 |

| ra=2048,20b-ext4o-n | 24G:128k | 79368 | 99 | 396624 | 78 | 201268 | 22 | 81455 | 98 | 724906 | 35 | 215.9 | 1 | 256:1000:1000/256 | 25215 | 85 | 92973 | 76 | 47666 | 95 | 30323 | 82 | 25127 | 27 | 14946 | 48 |

| ra=4096,20b-ext4o-n | 24G:128k | 78046 | 99 | 425547 | 83 | 195908 | 21 | 78120 | 98 | 742222 | 34 | 214.6 | 1 | 256:1000:1000/256 | 25801 | 86 | 84738 | 77 | 48139 | 95 | 29642 | 83 | 25092 | 27 | 14970 | 48 |

| ra=4096,20b-ext4o-n | 24G:128k | 80446 | 99 | 422479 | 84 | 221699 | 24 | 78926 | 98 | 742196 | 34 | 211.8 | 1 | 256:1000:1000/256 | 31716 | 87 | 104644 | 77 | 49312 | 95 | 29711 | 83 | 24884 | 25 | 14957 | 47 |

| ra=6144,20b-ext4o-n | 24G:128k | 82165 | 99 | 425591 | 83 | 223124 | 24 | 80811 | 98 | 756523 | 36 | 216.8 | 1 | 256:1000:1000/256 | 24299 | 86 | 92723 | 81 | 50351 | 95 | 28590 | 83 | 24645 | 26 | 14795 | 49 |

| ra=8192,20b-ext4o-n | 24G:128k | 80847 | 99 | 423526 | 85 | 205077 | 22 | 81274 | 99 | 793426 | 37 | 213.1 | 1 | 256:1000:1000/256 | 26807 | 86 | 98525 | 74 | 49766 | 95 | 30673 | 82 | 25068 | 26 | 14977 | 47 |

| ra=12288,20b-ext4o-n | 24G:128k | 80222 | 99 | 421460 | 85 | 190151 | 21 | 79030 | 99 | 852707 | 39 | 212.6 | 1 | 256:1000:1000/256 | 25322 | 86 | 97086 | 77 | 48005 | 95 | 29201 | 83 | 24894 | 26 | 14852 | 47 |

| ra=16384,20b-ext4o-n | 24G:128k | 80301 | 99 | 421287 | 83 | 212562 | 24 | 78738 | 98 | 881021 | 44 | 214.8 | 1 | 256:1000:1000/256 | 25906 | 87 | 88404 | 76 | 50284 | 95 | 30031 | 82 | 25047 | 26 | 14954 | 46 |

| ra=16384,20b-ext4o-n | 24G:128k | 81411 | 98 | 416509 | 81 | 217728 | 24 | 81333 | 99 | 873385 | 39 | 208.4 | 1 | 256:1000:1000/256 | 26607 | 87 | 92546 | 73 | 49465 | 95 | 27732 | 76 | 27098 | 28 | 14796 | 46 |

| ra=20480,20b-ext4o-n | 24G:128k | 81400 | 99 | 369540 | 75 | 214582 | 23 | 78514 | 99 | 870089 | 38 | 209.2 | 1 | 256:1000:1000/256 | 27278 | 87 | 96293 | 75 | 51805 | 95 | 30645 | 83 | 25119 | 26 | 14858 | 49 |

| ra=24576,20b-ext4o-n | 24G:128k | 77745 | 97 | 369217 | 76 | 218317 | 23 | 79981 | 98 | 861250 | 38 | 210.3 | 1 | 256:1000:1000/256 | 26317 | 87 | 90393 | 78 | 49510 | 95 | 30313 | 82 | 24955 | 26 | 14915 | 47 |

| ra=28672,20b-ext4o-n | 24G:128k | 77815 | 98 | 373675 | 75 | 212021 | 24 | 80703 | 99 | 864974 | 38 | 205.5 | 1 | 256:1000:1000/256 | 25863 | 87 | 100285 | 87 | 51247 | 95 | 30294 | 85 | 26021 | 28 | 14690 | 48 |

| ra=32768,20b-ext4o-n | 24G:128k | 76146 | 99 | 370353 | 77 | 226880 | 25 | 81395 | 98 | 872858 | 38 | 206.7 | 1 | 256:1000:1000/256 | 25608 | 86 | 95173 | 75 | 48072 | 95 | 26183 | 77 | 27206 | 29 | 14792 | 47 |

| xfs | ||||||||||||||||||||||||||

| ra=256,20a-xfs | 24G:128k | 86210 | 99 | 298233 | 27 | 76900 | 8 | 75983 | 98 | 357826 | 19 | 132.2 | 1 | 256:1000:1000/256 | 11875 | 75 | 99642 | 98 | 10360 | 49 | 11352 | 73 | 85324 | 98 | 8285 | 47 |

| ra=2048,20a-xfs | 24G:128k | 85373 | 99 | 335526 | 29 | 82278 | 8 | 76720 | 99 | 723877 | 33 | 128.1 | 1 | 256:1000:1000/256 | 11790 | 72 | 97639 | 98 | 10319 | 50 | 11129 | 72 | 88648 | 98 | 8466 | 50 |

| ra=4096,20a-xfs | 24G:128k | 89683 | 99 | 388150 | 33 | 85687 | 8 | 77111 | 98 | 732134 | 33 | 130.3 | 1 | 256:1000:1000/256 | 11808 | 72 | 101388 | 98 | 10290 | 49 | 11331 | 71 | 95042 | 98 | 8322 | 48 |

| ra=4096,20a-xfs | 24G:128k | 86422 | 99 | 317103 | 27 | 68941 | 6 | 80072 | 98 | 563339 | 25 | 129.1 | 1 | 256:1000:1000/256 | 7958 | 49 | 104911 | 98 | 5094 | 31 | 8043 | 52 | 82245 | 94 | 5745 | 34 |

| ra=4096,20a-xfs | 24G:128k | 88364 | 99 | 300857 | 27 | 87146 | 8 | 78496 | 99 | 749202 | 34 | 136.7 | 1 | 256:1000:1000/256 | 11823 | 70 | 119711 | 98 | 10376 | 48 | 11373 | 71 | 104031 | 98 | 8341 | 45 |

| ra=6144,20a-xfs | 24G:128k | 85686 | 99 | 405204 | 35 | 90438 | 9 | 80001 | 99 | 738214 | 33 | 130.8 | 1 | 256:1000:1000/256 | 11733 | 72 | 99662 | 98 | 10309 | 49 | 11405 | 76 | 75561 | 98 | 8280 | 48 |

| ra=8192,20a-xfs | 24G:128k | 89638 | 99 | 288903 | 26 | 91047 | 9 | 80026 | 99 | 780573 | 34 | 133.5 | 1 | 256:1000:1000/256 | 11759 | 72 | 97683 | 98 | 10348 | 50 | 11285 | 73 | 87694 | 98 | 8270 | 47 |

| ra=12288,20a-xfs | 24G:128k | 88192 | 99 | 358798 | 32 | 94398 | 9 | 81158 | 99 | 840077 | 37 | 154.2 | 1 | 256:1000:1000/256 | 11820 | 72 | 102961 | 98 | 10279 | 48 | 11308 | 74 | 94171 | 98 | 8256 | 47 |

| ra=16384,20a-xfs | 24G:128k | 87618 | 99 | 318582 | 28 | 95768 | 9 | 81210 | 99 | 837252 | 37 | 130.1 | 1 | 256:1000:1000/256 | 11893 | 73 | 96794 | 98 | 10322 | 50 | 11319 | 73 | 87824 | 98 | 8263 | 47 |

| ra=16384,20a-xfs | 24G:128k | 88262 | 99 | 223126 | 19 | 70577 | 7 | 80106 | 98 | 623073 | 28 | 126.3 | 1 | 256:1000:1000/256 | 7991 | 49 | 111657 | 98 | 5073 | 32 | 7932 | 50 | 76352 | 95 | 5219 | 32 |

| ra=16384,20a-xfs | 24G:128k | 89794 | 99 | 381078 | 33 | 95442 | 10 | 76905 | 98 | 844757 | 40 | 132.0 | 1 | 256:1000:1000/256 | 11781 | 70 | 112131 | 98 | 10331 | 48 | 11363 | 71 | 99052 | 98 | 8278 | 46 |

| ra=20480,20a-xfs | 24G:128k | 88497 | 99 | 336654 | 30 | 97998 | 10 | 79165 | 98 | 850002 | 38 | 136.1 | 1 | 256:1000:1000/256 | 11742 | 73 | 102287 | 98 | 10308 | 49 | 11231 | 75 | 93636 | 98 | 8349 | 47 |

| ra=24576,20a-xfs | 24G:128k | 89613 | 99 | 363928 | 31 | 98245 | 10 | 75613 | 97 | 841587 | 38 | 139.1 | 1 | 256:1000:1000/256 | 11760 | 73 | 102786 | 98 | 10308 | 48 | 11311 | 75 | 93892 | 98 | 8387 | 47 |

| ra=28672,20a-xfs | 24G:128k | 89483 | 99 | 383640 | 34 | 99969 | 10 | 76944 | 98 | 847138 | 41 | 142.7 | 1 | 256:1000:1000/256 | 11616 | 73 | 92174 | 98 | 10322 | 50 | 11302 | 68 | 92199 | 98 | 8322 | 48 |

| ra=32768,20a-xfs | 24G:128k | 87983 | 99 | 365695 | 32 | 100214 | 10 | 79970 | 98 | 842895 | 38 | 129.2 | 1 | 256:1000:1000/256 | 11717 | 72 | 101733 | 98 | 10313 | 49 | 11356 | 73 | 86421 | 98 | 8437 | 48 |

Comments: ext4 performance is as good as or better than xfs performance for these tests. A feature of the rewrite tests (ReW column) is that ext4 performance roughly doubles when the nodelalloc mount option is used.
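The size of the nodelalloc effect on the rewrite test can be read off the table by dividing the ReW figures. A small sketch, using three pairs of values copied from the ext4 rows above (ratio_pct is a hypothetical helper that returns the ratio as a whole-number percentage):

```shell
#!/bin/sh
# Hypothetical helper: second value as an integer percentage of the first.
ratio_pct() { echo $(( $2 * 100 / $1 )); }

# ReW in KiB/s: ext4 default, then ext4 with nodelalloc, from the table.
echo "ra=256:   $(ratio_pct 73566 140274)%"
echo "ra=4096:  $(ratio_pct 90695 195908)%"
echo "ra=32768: $(ratio_pct 104190 226880)%"
```

These three pairs give 190%, 216% and 217% respectively, that is, close to a factor of two.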

At the time of writing, ext4/e2fsprogs does not support file-systems bigger than 16 TiB, which is why, for the ext4 tests above, a firmware-partition of 10TB was used. For production purposes, xfs will initially be used because of the ext4 limitation.

| Type of Iozone test | Write | Rewrite | Read | Reread |

|---|---|---|---|---|

| Throughput test with 1 process: ra=4096,20b-ext4o-nodelalloc | 427428 | 443663 | 619943 | 619656 |

| Throughput test with 1 process: ra=16384,20b-ext4o-nodelalloc | 442429 | 443190 | 720980 | 725769 |

| Throughput test with 1 process: ra=4096,20a-xfs | 513985 | 172980 | 629283 | 624194 |

| Throughput test with 1 process: ra=16384,20a-xfs | 452636 | 171898 | 733350 | 732641 |
